Modern enterprises face a critical operational challenge: their operational data is fragmented across a distributed stack of best-of-breed applications. This fragmentation creates latency, inconsistencies, and engineering overhead that compromise operational efficiency.

Your sales team lives in a CRM like Salesforce, your finance department operates out of an ERP, and your product data resides in a production database like PostgreSQL. This separation creates data silos, a critical technical problem that leads to operational inefficiencies, manual reconciliation errors, and poor decision-making. When data is inconsistent or delayed, business operations can grind to a halt.

The enterprise data integration market is expanding rapidly and is projected to grow from $15.22 billion in 2025 to $30.17 billion by 2033. However, selecting the wrong integration architecture can result in brittle pipelines, silent failures, and significant maintenance overhead, all of which divert engineering resources away from work that delivers core business value.

Data integration tools are specialized software solutions that automate the process of combining data from multiple sources into a unified, coherent view. These tools act as digital bridges between different data sources, transforming information into compatible formats while ensuring data quality and consistency. [1]

The technical challenge extends beyond simple data movement. When CRMs, ERPs, and databases each hold only part of the picture, the resulting data silos cause inconsistency and high latency between systems, and the business loses a unified view of its operations. For engineering and data teams, the challenge is to keep these disparate systems aligned in real time to support mission-critical processes.

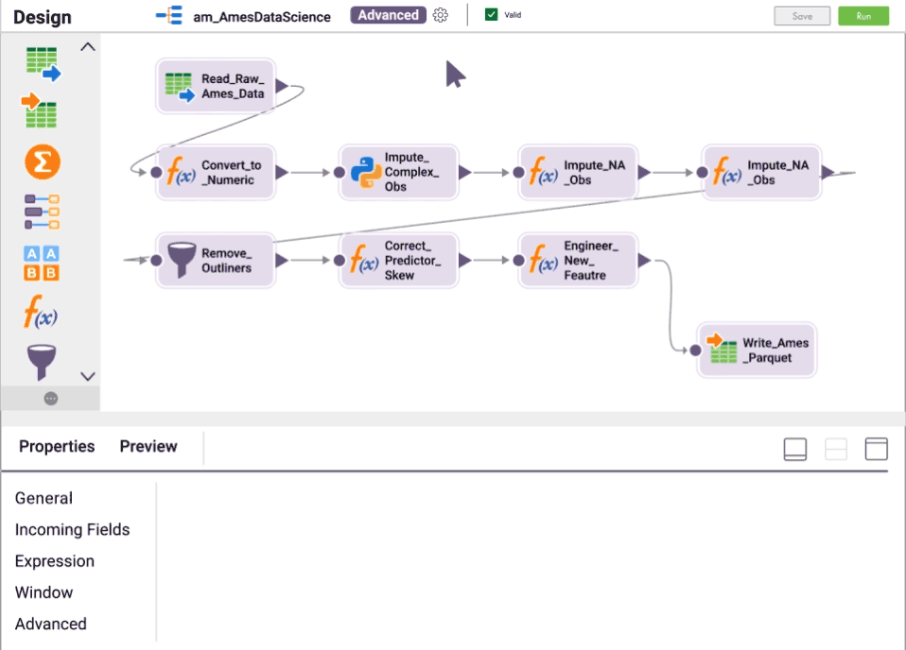

Modern platforms are built on different architectures, each designed for different technical requirements:

The Operational Sync Problem: Traditional ETL/ELT tools and generic iPaaS platforms fail to address the fundamental requirement for real-time operational data consistency. That requirement poses a distinct and critical technical challenge: maintaining data integrity across live operational systems. When a sales representative updates a deal in Salesforce, that change must be reflected immediately in NetSuite and in your production PostgreSQL database. This is not an analytics problem; it is an operational imperative.

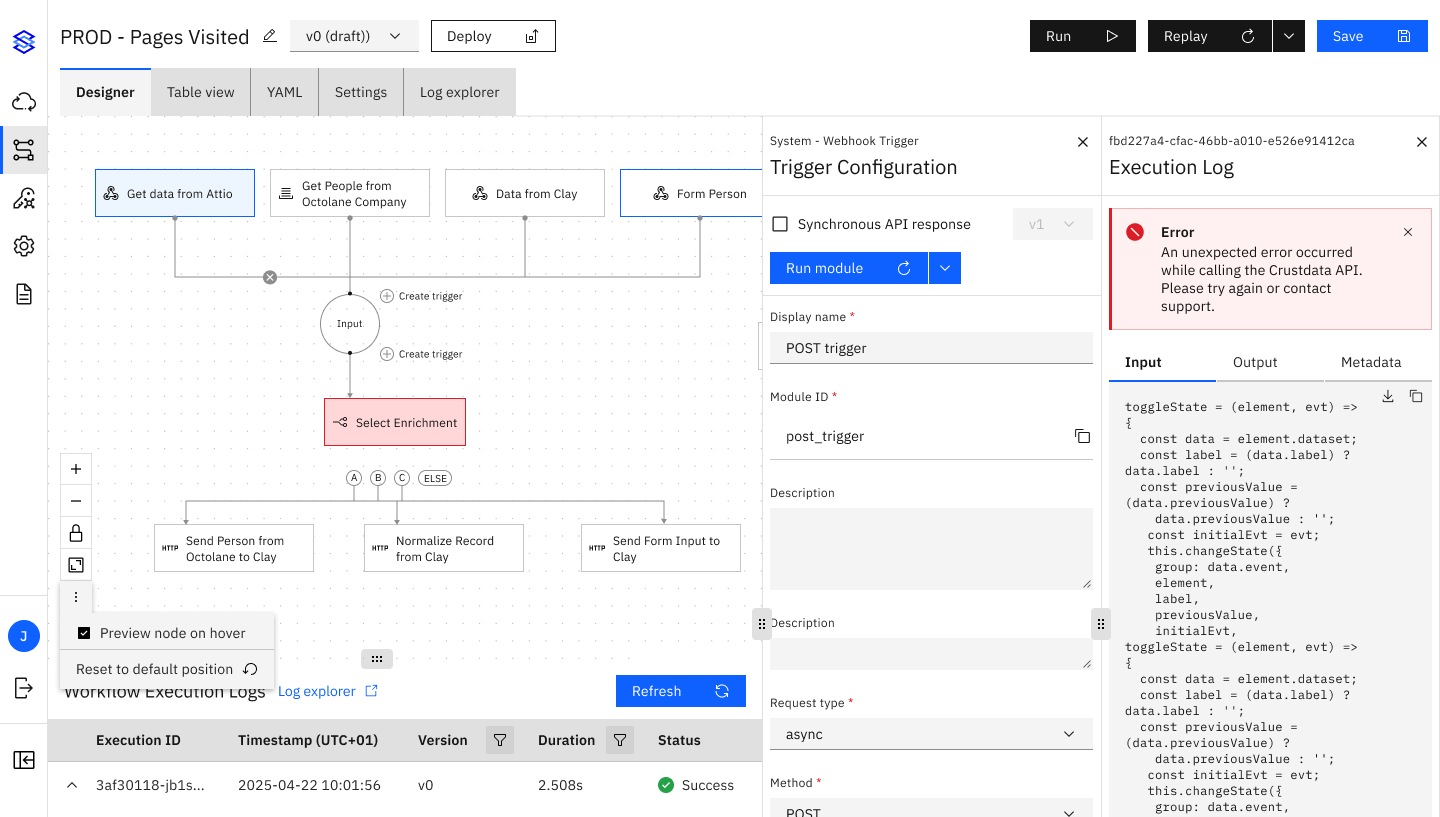

The Purpose-Built Solution: Stacksync is engineered to solve the specific technical challenge of real-time, bi-directional synchronization at scale. Unlike analytics-focused tools that prioritize one-way data movement into warehouses, Stacksync provides true bi-directional sync between operational systems, minimizing data latency and reducing engineering overhead.
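A core difficulty in any bi-directional sync is that every write the sync engine makes appears as a new change in the target system. Without some form of echo suppression, that change would be copied back and forth indefinitely. The Python sketch below shows one common approach under simplifying assumptions. Two in-memory systems stand in for, say, a CRM and a database. Every write emits a change event, much as a CDC stream or webhook would. The engine remembers what it wrote so it can drop the echo when it comes back. The names `System` and `BidirectionalSync` are hypothetical, and this is not Stacksync's implementation or API.

```python
from dataclasses import dataclass, field


@dataclass
class System:
    """Hypothetical in-memory stand-in for an operational system such as a CRM or database."""

    name: str
    records: dict = field(default_factory=dict)
    changelog: list = field(default_factory=list)  # pending (record_id, data) change events

    def write(self, record_id, data):
        # Every write, from a user or from the sync engine, emits a change event,
        # much as a CDC stream or webhook would.
        self.records[record_id] = dict(data)
        self.changelog.append((record_id, dict(data)))

    def drain_changes(self):
        # Hand over all pending change events and clear the queue.
        changes, self.changelog = self.changelog, []
        return changes


class BidirectionalSync:
    """Hypothetical engine that copies changes both ways and drops echoes of its own writes."""

    def __init__(self, a, b):
        self.systems = {a.name: a, b.name: b}
        self.peers = {a.name: b, b.name: a}
        # Values the engine itself wrote, keyed by (target system name, record id).
        self.own_writes = {}

    def run_once(self):
        """Process one pass of change events; return how many changes were propagated."""
        applied = 0
        for name, system in self.systems.items():
            target = self.peers[name]
            for record_id, data in system.drain_changes():
                # An event matching our own earlier write is an echo: consume it, don't copy it back.
                if self.own_writes.get((name, record_id)) == data:
                    del self.own_writes[(name, record_id)]
                    continue
                self.own_writes[(target.name, record_id)] = dict(data)
                target.write(record_id, data)
                applied += 1
        return applied


crm, db = System("crm"), System("db")
sync = BidirectionalSync(crm, db)
crm.write("deal-1", {"stage": "won"})
print(sync.run_once(), db.records)  # 1 {'deal-1': {'stage': 'won'}}
print(sync.run_once())              # 0: the echo was consumed, so nothing ping-pongs
```

Matching echoes by content keeps the sketch short. A production engine would more likely tag its own writes with an origin marker or compare version identifiers. It would also need a conflict policy for records edited on both sides between passes.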

Key Features:

Technical Advantages: Transforms integration from a high-maintenance liability into a reliable utility, enabling teams to build on a consistent and real-time data foundation. Eliminates the complexity of managing API changes, rate limits, and error handling that plague custom integration approaches.

Why Choose Stacksync? For organizations requiring guaranteed operational data consistency across mission-critical business systems, Stacksync provides a purpose-built architecture that minimizes data latency while preserving engineering efficiency.

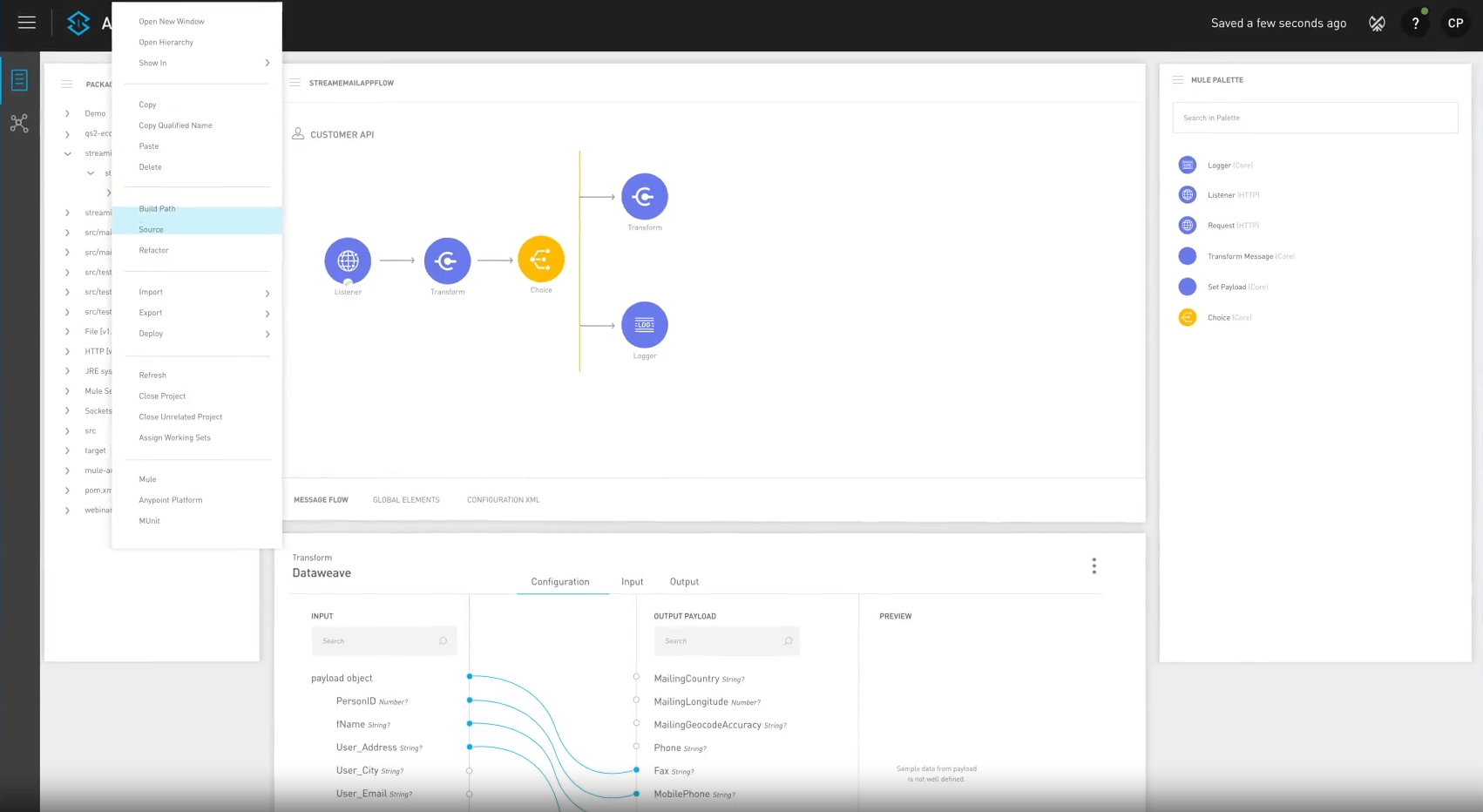

MuleSoft Anypoint Platform is a hybrid integration platform that excels at API management and connecting cloud and on-premise systems. It is a powerful but complex solution best suited for large enterprises building an application network.

Key Features:

Limitation: While powerful, enterprise platforms like MuleSoft often rely on batch processing for data movement, and they require specialized developers and long implementation cycles. They are overkill for many mid-market companies and are not purpose-built for real-time, bi-directional operational sync.

Informatica is a leader in enterprise cloud data management. It offers a comprehensive suite of data integration tools suitable for large-scale, complex data environments. Its Intelligent Data Management Cloud (IDMC) platform uses AI-driven automation to provide end-to-end capabilities for data integration, quality, governance, and cataloging.

Key Features:

Best For: Large enterprises requiring comprehensive data management with extensive governance features.

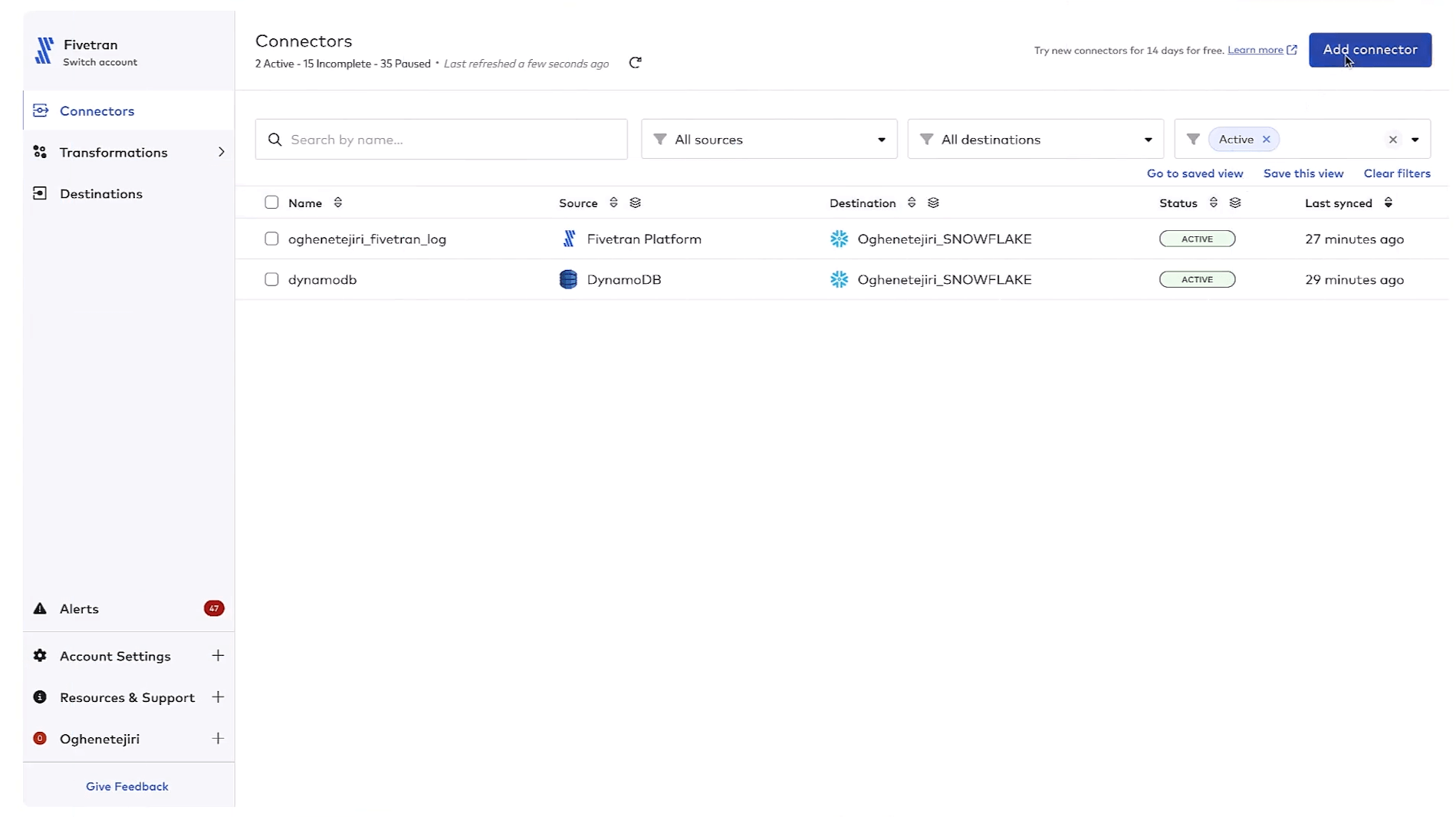

Fivetran is a cloud-native, fully managed data integration platform that specializes in automating the extract and load processes of ELT. It's known for its "zero-maintenance pipelines," aiming to simplify data integration by handling the complexities of data extraction and loading. Fivetran's approach is a good fit for companies looking for a hands-off solution to keep their data warehouse continuously updated.

Key Features:

Limitation: Fivetran and similar ELT tools are fundamentally one-way streets. They are engineered to push data to a destination, not to keep multiple operational systems in continuous two-way sync.
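The one-way pattern can be illustrated with a generic incremental extract-and-load step driven by a high-water-mark cursor. This minimal Python sketch rests on assumed conditions: rows carry an integer `updated_at` timestamp, and a dict keyed by primary key stands in for the warehouse. The `Row` type and `incremental_load` function are hypothetical. The sketch does not describe how Fivetran or any other vendor implements its connectors. Note that nothing is ever written back to the source.

```python
from dataclasses import dataclass


@dataclass
class Row:
    id: int
    updated_at: int  # assumed: monotonically increasing modification timestamp
    payload: str


def incremental_load(source_rows, warehouse, cursor):
    """Copy rows changed since `cursor` into `warehouse` and return the new cursor.

    One-way by design: the source is only read, never written.
    """
    changed = sorted(
        (row for row in source_rows if row.updated_at > cursor),
        key=lambda row: row.updated_at,
    )
    for row in changed:
        warehouse[row.id] = row  # upsert keyed on primary key
    # Advance the high-water mark to the newest change seen (unchanged if none).
    return max((row.updated_at for row in changed), default=cursor)


source = [Row(1, 100, "a"), Row(2, 105, "b")]
warehouse = {}
cursor = incremental_load(source, warehouse, cursor=0)       # loads both rows, cursor becomes 105
source[0] = Row(1, 110, "a-edited")
cursor = incremental_load(source, warehouse, cursor=cursor)  # loads only row 1, cursor becomes 110
```

Filtering on `updated_at > cursor` is the simplest cursor rule. However, it can miss rows that commit late with a timestamp at or below the saved cursor. Real pipelines typically re-read an overlap window or read from a database change log instead.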

Talend is one of the most mature enterprise data integration tools on the market, with both open-source and commercial offerings. [3] It provides a unified platform that integrates data management, data quality, and application integration in a single suite. Its foundation in open-source technologies allows for flexibility and community-driven innovation.

Key Features:

Complexity Issue: Its power comes with significant complexity. Setting up real-time, bi-directional sync in Talend often requires considerable technical expertise and configuration, as it is a general-purpose platform rather than a specialized sync tool.

The SnapLogic Intelligent Integration Platform uses a drag-and-drop pipeline builder with more than 600 pre-built connectors. It is designed to support a range of integration needs, from traditional ETL-style data integration for analytics to real-time application integration, all with minimal coding.

Key Features:

Best For: Businesses requiring quick integrations and a lightweight platform for connecting cloud apps and services. [6]

Workato's iPaaS solution focuses on process automation and collaboration between IT and business through an intuitive, AI-assisted user experience.

Key Features:

Best For: Organizations seeking to combine data integration with sophisticated workflow automation.

Azure Data Factory (ADF) is a fully managed, cloud-native data integration and orchestration service within the Microsoft Azure ecosystem. ADF is excellent for orchestrating data movement within Azure and connecting to various on-premises and cloud sources. However, it functions primarily as a one-way ETL/ELT tool and is not suited for real-time, bi-directional synchronization between operational applications.

Key Features:

Best For: Azure-first teams with extensive integrations across the Microsoft ecosystem. [3]

Oracle Data Integrator (ODI) is an enterprise-grade data integration tool optimized for ETL processes in Oracle environments. Its native support for, and deep integration with, Oracle databases results in optimized performance and efficient data loading.

Key Features:

Best For: Medium to large enterprises across industries that require a powerful and scalable data integration solution. [8]

Boomi (formerly Dell Boomi) provides a cloud-native integration platform that combines simplicity with enterprise capabilities.

Key Features:

Best For: Organizations requiring flexible deployment options and user-friendly integration capabilities that can scale from simple connections to complex enterprise architectures.

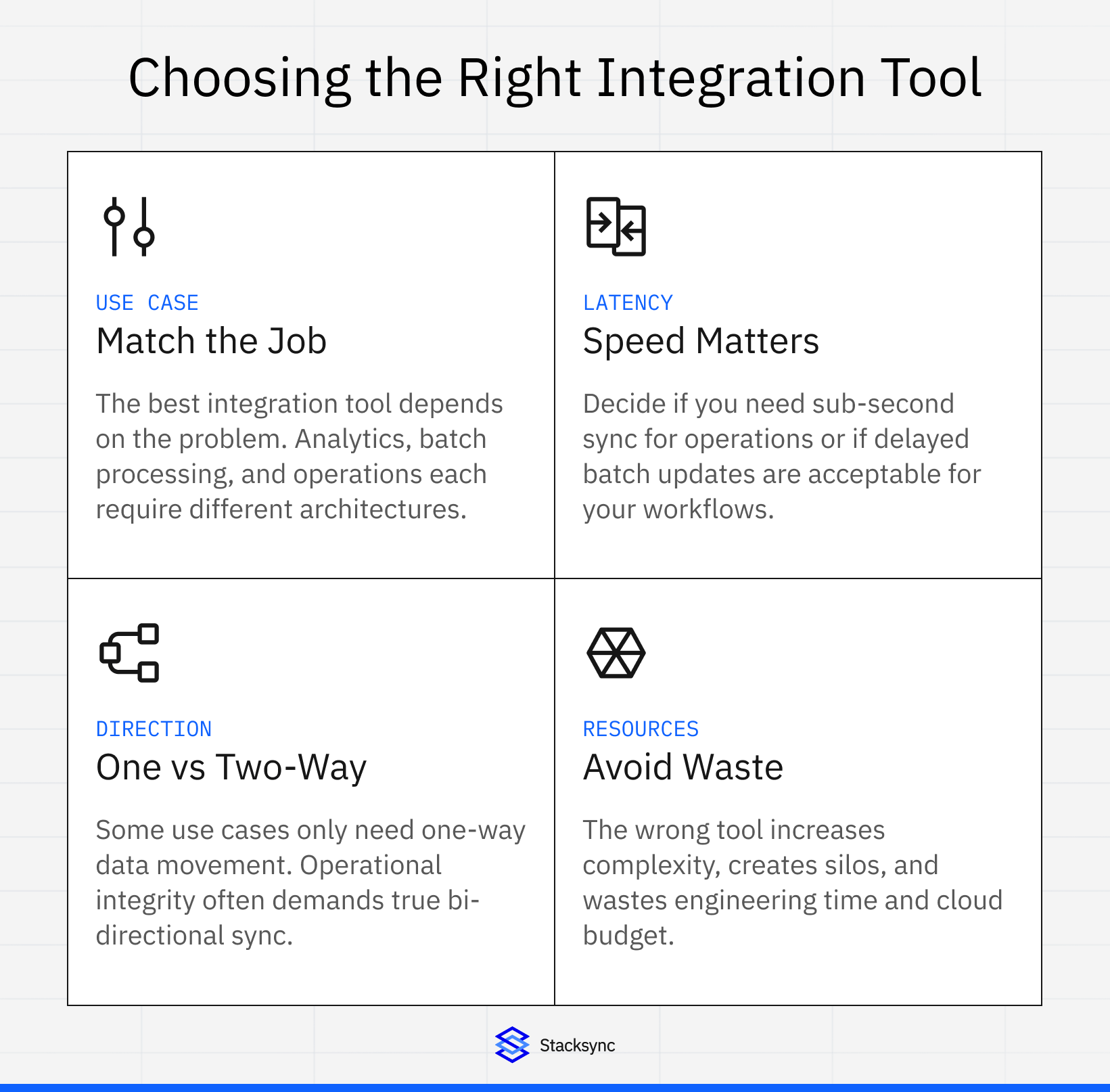

Understanding architectural approaches is critical for technical selection:

Analytics-focused ELT platforms excel at one primary function: reliably moving data from source systems into a central data warehouse or lake for analytics. They optimize for analytics but have fundamental limitations for operational synchronization.

Traditional enterprise integration platforms are built for large enterprises with complex, high-volume requirements and the resources to manage them. They provide comprehensive transformation capabilities but often require specialized expertise and extended implementation cycles.

While ELT tools build data highways to a single destination (the warehouse), Stacksync and similar platforms maintain real-time consistency across multiple operational systems simultaneously.

The shift towards real-time data processing is unmistakable. Tools are increasingly focusing on minimizing latency in data transmission between sources, a reflection of the growing need for immediate insights in decision-making processes. [7]

A low-code integration platform allows users to build, automate, and manage data pipelines using visual interfaces and optional coding. These platforms make it faster and easier for data engineers, analysts, and business users to integrate data across systems without writing complex scripts, accelerating time-to-insight and improving collaboration.

Automated data integrations significantly boost operational efficiency by saving time and reducing manual effort. They also enhance data accuracy and consistency, ensuring that all your systems are working with the most current and correct information. When that synchronization happens in real time, teams can make informed decisions faster.

For any tool handling business-critical data, enterprise-ready security is non-negotiable. Look for compliance with frameworks and regulations such as SOC 2, GDPR, and HIPAA, along with robust features for data encryption, access control, and secure connectivity.

Analytics-Focused Tools (Fivetran, Informatica, Talend):

Operational Sync Platforms (Stacksync):

Building a sync engine in-house offers complete control but comes at a significant cost. This approach requires extensive development resources to build and, more importantly, to maintain. Engineering teams become responsible for managing API changes, rate limits, pagination, error handling, and monitoring. That work is a constant, brittle maintenance burden that distracts from core business objectives.
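Two of the chores named above, pagination and rate limits, can be sketched in a few lines of Python. The example assumes a hypothetical `fetch_page(cursor)` callable that returns `(items, next_cursor)`. It also assumes a hypothetical `RateLimited` exception raised when the API responds with HTTP 429. Real SaaS APIs differ in how they expose cursors and retry hints. Treat this as an illustration of exponential backoff with jitter, not as a client for any specific service.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical error a client raises when the API answers HTTP 429 Too Many Requests."""

    def __init__(self, retry_after=None):
        super().__init__("rate limited")
        self.retry_after = retry_after  # seconds, if the server sent a Retry-After hint


def fetch_all(fetch_page, max_retries=5, base_delay=0.5, sleep=time.sleep):
    """Follow a cursor-paginated API to the end, retrying rate-limited calls.

    `fetch_page(cursor)` is an assumed callable returning (items, next_cursor),
    where next_cursor is None on the last page.
    """
    items, cursor = [], None
    while True:
        for attempt in range(max_retries + 1):
            try:
                page, next_cursor = fetch_page(cursor)
                break
            except RateLimited as exc:
                if attempt == max_retries:
                    raise  # give up and surface the error to the caller
                # Prefer the server's hint; otherwise back off exponentially (0.5s, 1s, 2s, ...).
                delay = exc.retry_after if exc.retry_after is not None else base_delay * 2 ** attempt
                # Jitter keeps many workers from retrying in lockstep.
                sleep(delay + random.uniform(0, base_delay))
        items.extend(page)
        if next_cursor is None:
            return items
        cursor = next_cursor
```

Injecting `sleep` makes the retry logic testable without real waiting. Even this small function omits concerns that an in-house engine eventually needs, such as token refresh, checkpoints after partial failures, and monitoring.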

Automation minimizes the risk of human error, which can lead to data discrepancies and potential business disruptions. By automating routine data tasks, your team can redirect their focus towards more strategic initiatives that add value to your business, driving innovation and growth. [4]

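Modern platforms implement validation, conflict resolution, and error handling mechanisms to preserve data integrity across connected systems. Conflict resolution matters whenever the same record is edited in two systems between sync passes, because the platform must decide deterministically which change wins.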
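As a concrete illustration of conflict resolution, the Python sketch below merges two versions of the same record using field-level last-writer-wins. Optional per-field validators reject bad values before they propagate. The `FieldValue` type, the timestamps, and the tie-breaking rule are assumptions made for this example. Platforms differ in which conflict policy they use, and last-writer-wins is only one option.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldValue:
    value: object
    modified_at: float  # assumed: timestamp of the last change to this field
    source: str         # which system made the change


def merge_records(a, b, validators=None):
    """Field-level last-writer-wins merge of two versions of one record.

    `a` and `b` map field names to FieldValue. For each field the newest valid
    value wins; an invalid newer value is rejected and an older valid one is kept.
    Returns (merged fields, list of rejected (field, FieldValue) pairs).
    """
    validators = validators or {}
    merged, rejected = {}, []
    for name in a.keys() | b.keys():
        candidates = [v for v in (a.get(name), b.get(name)) if v is not None]
        # Newest first; the source name breaks timestamp ties deterministically.
        candidates.sort(key=lambda v: (v.modified_at, v.source), reverse=True)
        is_valid = validators.get(name, lambda value: True)
        for candidate in candidates:
            if is_valid(candidate.value):
                merged[name] = candidate
                break
            rejected.append((name, candidate))
    return merged, rejected


crm = {"stage": FieldValue("won", 10.0, "crm"), "amount": FieldValue(500, 5.0, "crm")}
erp = {"stage": FieldValue("open", 8.0, "erp"), "amount": FieldValue(-1, 12.0, "erp")}
merged, rejected = merge_records(crm, erp, validators={"amount": lambda v: v >= 0})
# stage: "won" (the newer CRM edit); amount: 500 (the newer ERP value -1 fails validation)
```

Resolving conflicts per field rather than per record lets independent edits to different fields in different systems survive the same merge. Last-writer-wins also depends on reasonably synchronized clocks. Systems that cannot assume synchronized clocks often use version vectors or designate a source of truth per field instead.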

The "best" data integration tool is the one engineered for your specific problem. A platform designed for analytics is not suited for operational sync, and vice versa.

Focusing on operational integrity is paramount for engineering teams building a reliable and scalable data ecosystem. By solving the core problem of data consistency between CRMs, ERPs, and databases, platforms like Stacksync provide the stable foundation for all data-driven initiatives, from analytics to automation.

The enterprise data integration landscape in 2026 presents distinct architectural choices. Traditional ETL/ELT platforms excel at analytics-focused data movement but do not address operational sync requirements. Whichever architecture fits, automated integration tools save tremendous time. They eliminate manual data entry, drastically reduce the risk of errors, and keep data across different systems accurate and up to date. This leads to better, faster decision-making and significantly improves operational efficiency. [4]

For organizations prioritizing operational data consistency with minimal engineering overhead, Stacksync's purpose-built bi-directional synchronization platform eliminates data fragmentation across mission-critical business systems. Traditional platforms remain viable for comprehensive transformation requirements, but their complexity makes them unsuitable for teams requiring rapid deployment and guaranteed operational reliability.

Ready to eliminate data silos and achieve true operational data consistency? Explore Stacksync's real-time bi-directional sync capabilities and transform your integration strategy from a maintenance burden into a competitive advantage.